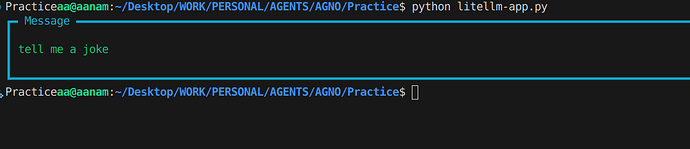

I'm facing an issue with LiteLLM: an agent that uses the LiteLLM model does not produce any output.

Here is the code:

```python
from agno.agent import Agent
from agno.models.litellm import LiteLLM
from dotenv import load_dotenv
import os

# Load environment variables (API keys, base URLs) from a local .env file
load_dotenv()

agent = Agent(
    model=LiteLLM(
        # LiteLLM model id in "provider/model" format; this one targets Ollama, not Groq
        id="ollama/qwen3:latest",
    ),
    markdown=True,
)

agent.print_response("tell me a joke", stream=True)
```

Response: